In the last section, we saw how word embeddings can be used with the Keras Sequential API. The Sequential API is a good starting point for beginners because it lets you create deep learning models quickly, but it is also important to understand how the Keras Functional API works. Most advanced deep learning models that involve multiple inputs and outputs use the Functional API.

Introduction

The Keras functional API is a way to create models that are more flexible than those built with the tf.keras.Sequential API. The functional API can handle models with non-linear topology, shared layers, and even multiple inputs or outputs. The main idea is that a deep learning model is usually a directed acyclic graph of layers.

A deep learning model is usually a directed acyclic graph made up of multiple layers, and the functional API helps build this graph of layers. The Keras Sequential model API, by contrast, is useful for creating simple neural network architectures without much hassle.

The only drawback of the Sequential API is that it does not allow us to build Keras models with multiple inputs or outputs. Instead, it is limited to exactly 1 input tensor and 1 output tensor. The Keras Functional API is the second way of building models, and it lets us construct neural networks with multiple inputs and outputs that can also share layers. As an example, suppose we try to predict how many reposts and likes a news headline will receive on Twitter.

The model can also be supervised using two loss functions. Using the main loss function earlier in the model is a good regularization mechanism for deep learning models. Convolutional layers are the key building blocks of convolutional neural networks. During training they learn many different ways of seeing the input data.

Neural networks play an important role in machine learning. Inspired by how human brains work, these computational systems learn a relationship between complex and often non-linear inputs and outputs. A basic neural network consists of an input layer, a hidden layer and an output layer. Each layer is made of a certain number of nodes or neurons. Neural networks with many layers are generally referred to as deep learning systems. The main function for using the Functional API is called keras_model().

With keras_model, you combine input and output layers. To make it easier to understand, let's look at a simple example. Below, I'll be building the same model as in last week's blog post, where I trained an image classification model with keras_model_sequential. Only now, I'll be using the Functional API instead.

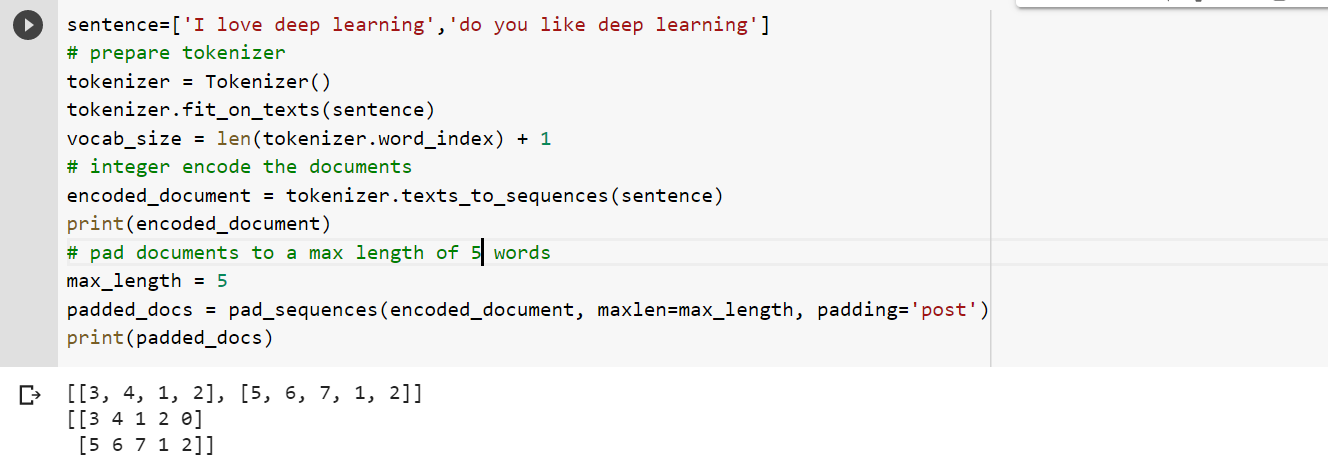

So the functional API is a way to build graphs of layers. We can create a simple Keras model by just adding an embedding layer. In the above example, we set the vocabulary size to 10, as we will be encoding the numbers 0 to 9. We want the length of the word vector to be 4, so output_dim is set to 4.
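As a rough sketch of the embedding layer just described (vocabulary size 10, word vectors of length 4, input sequences of length 2), the snippet below builds it with the functional API. The variable names and the sample batch are illustrative assumptions and are not taken from the original script.

```python
import numpy as np
from tensorflow import keras
from tensorflow.keras import layers

# Input: sequences of 2 integer IDs, each in the range 0..9.
inputs = keras.Input(shape=(2,), dtype="int32")
# input_dim=10 is the vocabulary size, output_dim=4 the word-vector length.
embedded = layers.Embedding(input_dim=10, output_dim=4)(inputs)
model = keras.Model(inputs, embedded)

# Illustrative batch of three sequences (made up for this sketch).
batch = np.array([[1, 2], [3, 4], [9, 0]])
vectors = model.predict(batch, verbose=0)
print(vectors.shape)  # one 4-dimensional vector per ID: (3, 2, 4)
```

The layer holds 10 x 4 = 40 weights, one row per vocabulary entry.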

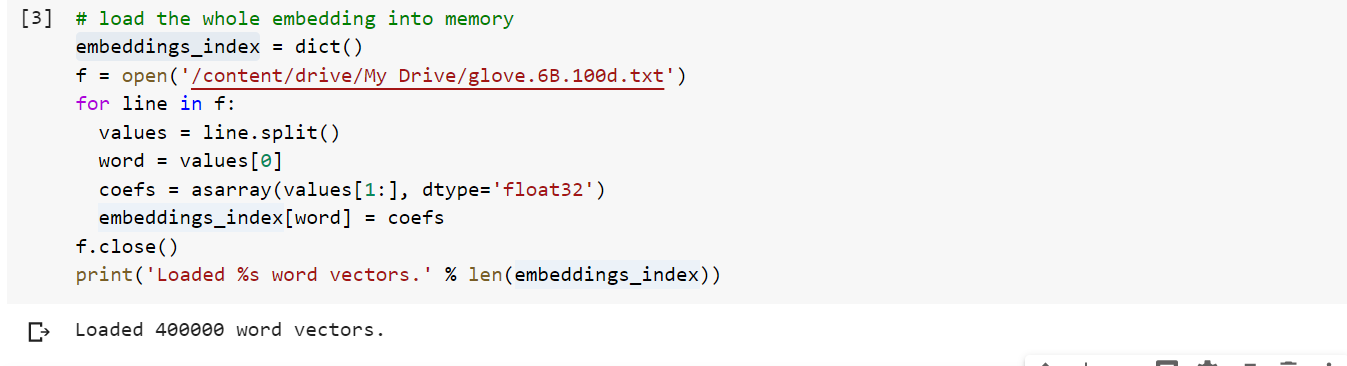

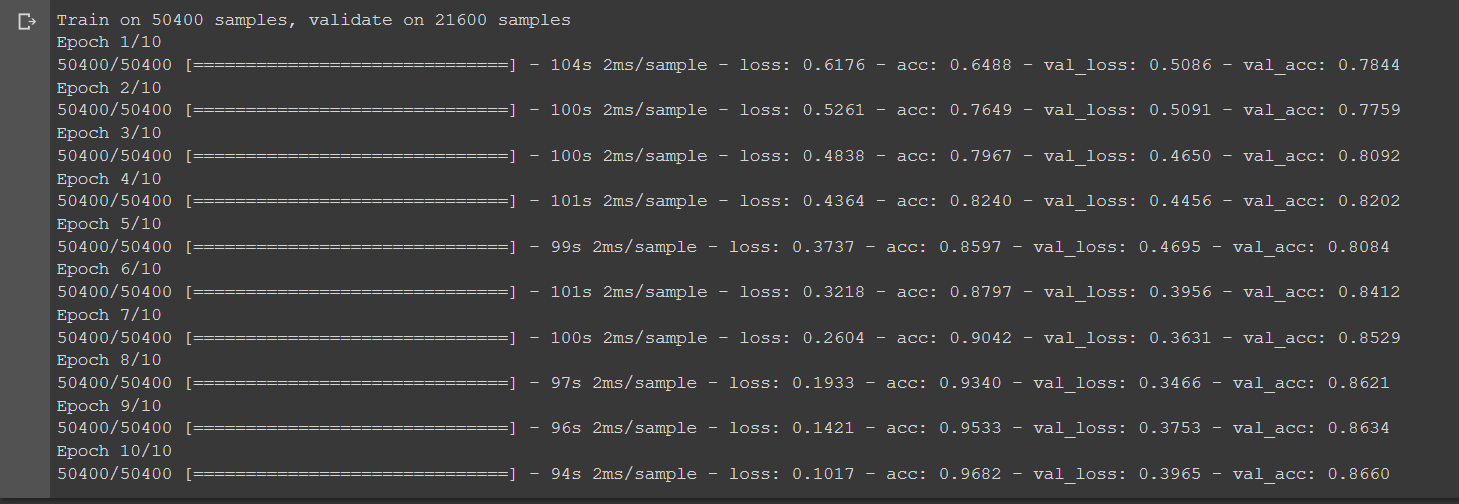

The length of the input sequence to the embedding layer will be 2. The Keras functional API allows us to build each layer granularly, with part or all of the inputs directly connected to the output layer, and with the ability to connect any layer to any other layer. Features like concatenating values, sharing layers, branching layers, and providing multiple inputs and outputs are the strongest reasons to choose the functional API over the sequential one. I created a Keras deep learning model with GloVe word embeddings on the IMDB movie data set. I used 50 dimensions, but during validation there is a large gap between the accuracy on the training data and on the validation data.

I have also done the pre-processing very carefully using a custom approach, but I am still getting overfitting. You should now be familiar with word embeddings, why they are useful, and also how to use pretrained word embeddings in your training. You have also learned how to work with neural networks and how to use hyperparameter optimization to squeeze more performance out of your model.

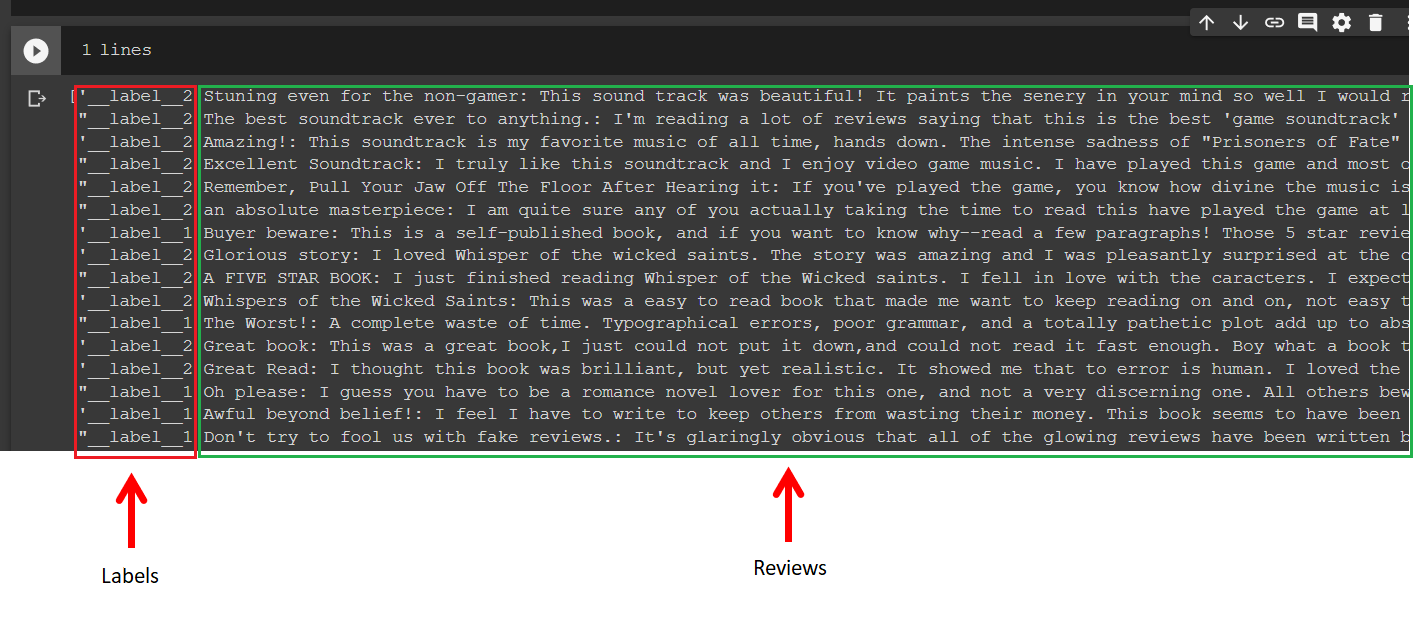

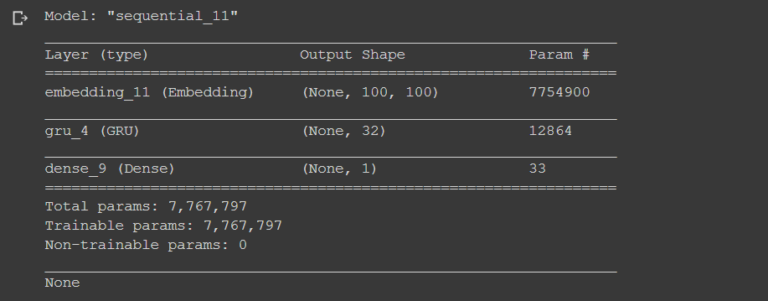

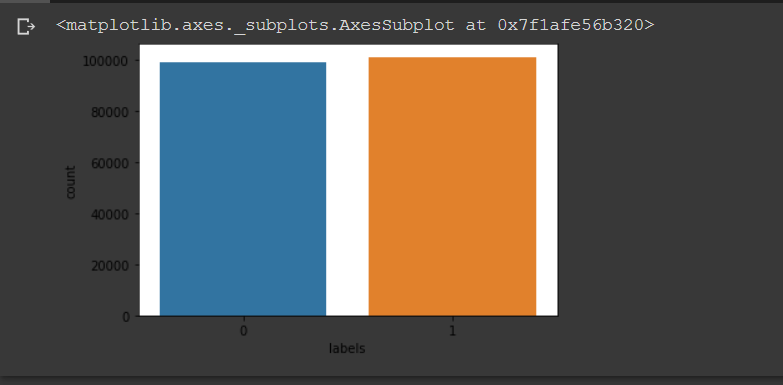

Keras Functional API Embedding Layer

In the script above, we create a Sequential model and add the Embedding layer as the first layer of the model. The size of the vocabulary is specified by the vocab_length parameter. The dimension of each word vector will be 20, and the input_length will be the length of the longest sentence, which is 7. Next, the output of the Embedding layer is flattened so that it can be used directly with the densely connected layer. Since this is a binary classification problem, we use the sigmoid function as the activation function on the dense layer.

Firstly, thank you so much for the great website you have. It has been an exceptional help from the first day I started to work on deep learning. What is the corresponding loss function for the model with multiple inputs and one output that you have in the subsection Multiple Input Model? I want to know, when you have X1 and X2 as inputs and Y as the output, what the mathematical expression for the loss function would be. This lets you design advanced neural networks for complex problems, but it comes with a learning curve as well. Both the headline, which is a sequence of words, and an auxiliary input can be given to the model; the auxiliary input carries data such as the time or date the headline was posted.
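The headline model with an auxiliary input and two loss functions can be sketched roughly as follows. All sizes, layer widths, layer names and the loss weights here are assumptions chosen only for illustration; the text does not specify them.

```python
import numpy as np
from tensorflow import keras
from tensorflow.keras import layers

# Hypothetical sizes, chosen only for this sketch.
VOCAB_SIZE, HEADLINE_LEN, AUX_FEATURES = 10000, 20, 5

# Two inputs: the headline as word IDs, plus auxiliary data (e.g. posting time).
headline_input = keras.Input(shape=(HEADLINE_LEN,), dtype="int32", name="headline")
aux_input = keras.Input(shape=(AUX_FEATURES,), name="aux")

x = layers.Embedding(VOCAB_SIZE, 64)(headline_input)
lstm_out = layers.LSTM(32)(x)
# Auxiliary output: applies a loss early in the network.
aux_output = layers.Dense(1, activation="sigmoid", name="aux_output")(lstm_out)

# Merge the text branch with the auxiliary input, then predict the main output.
merged = layers.concatenate([lstm_out, aux_input])
hidden = layers.Dense(64, activation="relu")(merged)
main_output = layers.Dense(1, activation="sigmoid", name="main_output")(hidden)

model = keras.Model(inputs=[headline_input, aux_input],
                    outputs=[main_output, aux_output])
# The total loss is the weighted sum of the two per-output losses.
model.compile(
    optimizer="rmsprop",
    loss={"main_output": "binary_crossentropy",
          "aux_output": "binary_crossentropy"},
    loss_weights={"main_output": 1.0, "aux_output": 0.2},
)

# Random data, only to check that the shapes line up.
headlines = np.random.randint(1, VOCAB_SIZE, size=(4, HEADLINE_LEN))
aux_data = np.random.rand(4, AUX_FEATURES).astype("float32")
main_pred, aux_pred = model.predict([headlines, aux_data], verbose=0)
print(main_pred.shape, aux_pred.shape)
```

With these assumed weights the quantity being minimized is 1.0 x (main loss) + 0.2 x (auxiliary loss). For a model with two inputs X1 and X2 and a single output Y, there is only one term: the chosen loss computed between Y and the model's prediction from (X1, X2).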

Now you are ready to use the embedding matrix in training. Let's go ahead and use the previous network with global max pooling and see if we can improve this model. When you use pretrained word embeddings, you have the choice to either allow the embedding to be updated during training or to use the resulting embedding vectors as they are. To use text data as input to a deep learning model, we need to convert the text to numbers.
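Converting text to numbers can be sketched in plain Python as below. This is a simplified stand-in for what a Keras tokenizer does, not its actual implementation; the helper names `build_word_index` and `texts_to_padded_ids` and the two-sentence corpus are hypothetical.

```python
from collections import Counter

def build_word_index(texts):
    """Map each word to an integer ID, starting at 1 (0 is kept for padding)."""
    counts = Counter(word for text in texts for word in text.lower().split())
    # Most frequent words first; ties are broken alphabetically.
    ordered = sorted(counts, key=lambda w: (-counts[w], w))
    return {word: i for i, word in enumerate(ordered, start=1)}

def texts_to_padded_ids(texts, word_index, max_len):
    """Replace words by their IDs and pad each row with zeros up to max_len."""
    rows = []
    for text in texts:
        ids = [word_index[w] for w in text.lower().split() if w in word_index]
        ids = ids[:max_len]
        rows.append(ids + [0] * (max_len - len(ids)))
    return rows

corpus = ["the food was great", "the service was slow"]
word_index = build_word_index(corpus)
vocab_length = len(word_index) + 1  # +1 for the reserved index 0
padded = texts_to_padded_ids(corpus, word_index, max_len=5)
print(word_index)
print(padded)
```

The padded rows all have the same length, which is what an Embedding layer expects as input.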

However, unlike classical machine learning models, deep learning models can be greatly affected by passing in sparse vectors of huge size. Therefore, we need to convert our text to small dense vectors. Word embeddings help us convert text to dense vectors. The script stays the same, apart from the embedding layer.

Here in the embedding layer, the first parameter is the size of the vocabulary. The second parameter is the dimension of the output vector. Since we are using pretrained word embeddings that contain 100-dimensional vectors, we set the vector dimension to 100. In the model with custom embeddings, you can see that the first layer has 1000 trainable parameters. This is because our vocabulary size is 50 and each word is represented as a 20-dimensional vector.

Hence the total number of trainable parameters in that layer is 50 x 20 = 1000. Similarly, the output from the embedding layer is a sentence of 7 words where each word is represented by a 20-dimensional vector. When this 2D output is flattened, we get a 140-dimensional vector (7 x 20). The flattened vector is directly connected to the dense layer, which contains 1 neuron.
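The parameter counts above can be checked with a small sketch. It assumes the figures given in the text (vocabulary size 50, 20-dimensional vectors, sentences of length 7); the layer names are added here only so the layers can be looked up, and the model is not the original script.

```python
from tensorflow import keras
from tensorflow.keras import layers

vocab_length = 50      # vocabulary size from the text
max_sentence_len = 7   # length of the longest sentence from the text

model = keras.Sequential([
    keras.Input(shape=(max_sentence_len,), dtype="int32"),
    layers.Embedding(vocab_length, 20, name="embedding"),
    layers.Flatten(name="flatten"),
    layers.Dense(1, activation="sigmoid", name="output"),
])

embedding_params = model.get_layer("embedding").count_params()  # 50 * 20
flat_dim = int(model.get_layer("flatten").output.shape[-1])     # 7 * 20
dense_params = model.get_layer("output").count_params()         # 140 weights + 1 bias
print(embedding_params, flat_dim, dense_params)
```

The dense layer adds 141 parameters: one weight per flattened value plus a single bias.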

You can add more layers and more neurons for predicting the second output. This is where the functional API wins over the sequential API, because of the flexibility it offers. Using it, we can predict multiple outputs at the same time.

With the sequential API we would have had to build 2 different neural networks to predict the outputs y1 and y2, but the functional API lets us predict both outputs in a single network. We don't have much control over input, output, or flow in a sequential model. Sequential models are incapable of sharing layers or branching layers, and they cannot have multiple inputs or outputs.
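A single network predicting y1 and y2 might look like the sketch below. The input width, layer sizes, losses and the random training data are all assumptions made for illustration.

```python
import numpy as np
from tensorflow import keras
from tensorflow.keras import layers

inputs = keras.Input(shape=(8,), name="features")
# Layers shared by both predictions.
shared = layers.Dense(16, activation="relu")(inputs)

# Branch for the first output.
branch1 = layers.Dense(8, activation="relu")(shared)
y1 = layers.Dense(1, name="y1")(branch1)

# Branch for the second output.
branch2 = layers.Dense(8, activation="relu")(shared)
y2 = layers.Dense(1, name="y2")(branch2)

model = keras.Model(inputs=inputs, outputs=[y1, y2])
# One loss per output, given in the same order as the outputs.
model.compile(optimizer="adam", loss=["mse", "mse"])

# Random data, used only to show that training and prediction run.
X = np.random.rand(32, 8).astype("float32")
t1 = np.random.rand(32, 1).astype("float32")
t2 = np.random.rand(32, 1).astype("float32")
model.fit(X, [t1, t2], epochs=1, verbose=0)

pred_y1, pred_y2 = model.predict(X, verbose=0)
print(pred_y1.shape, pred_y2.shape)
```

Both branches start from the same shared layer, which is something a Sequential model cannot express.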

If we want to work with multiple inputs and outputs, then we need to use the Keras functional API. The model takes black and white images with a size of 64×64 pixels. There are two CNN feature extraction submodels that share this input; the first has a kernel size of 4 and the second a kernel size of 8. In addition to these carefully designed methods, a word embedding can be learned as part of a deep learning model. This is usually a slower approach, but it tailors the model to a specific training dataset.
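Returning to the shared-input image model described above, a minimal sketch could look like this. Only the 64×64 single-channel input and the kernel sizes 4 and 8 come from the text; the filter counts, pooling, and dense layers are assumptions.

```python
import numpy as np
from tensorflow import keras
from tensorflow.keras import layers

# One input shared by both feature extractors: 64x64 black and white images.
visible = keras.Input(shape=(64, 64, 1))

# First feature extractor, kernel size 4.
conv1 = layers.Conv2D(32, kernel_size=4, activation="relu")(visible)
pool1 = layers.MaxPooling2D(pool_size=(2, 2))(conv1)
flat1 = layers.Flatten()(pool1)

# Second feature extractor, kernel size 8.
conv2 = layers.Conv2D(16, kernel_size=8, activation="relu")(visible)
pool2 = layers.MaxPooling2D(pool_size=(2, 2))(conv2)
flat2 = layers.Flatten()(pool2)

# Merge both feature vectors and classify.
merged = layers.concatenate([flat1, flat2])
hidden = layers.Dense(10, activation="relu")(merged)
output = layers.Dense(1, activation="sigmoid")(hidden)

model = keras.Model(inputs=visible, outputs=output)

# Random images, only to confirm the graph is wired correctly.
images = np.random.rand(2, 64, 64, 1).astype("float32")
preds = model.predict(images, verbose=0)
print(preds.shape)
```

Each branch looks at the same image with a different receptive field, and the concatenation layer joins the two views before classification.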

Model class

The Keras functional API is a way of defining complex models (such as multi-output models, directed acyclic graphs, or models with shared layers). Fully connected network T... In this tutorial, you'll see how to represent words as vectors, which is the common way to use text in neural networks. Two possible ways to represent a word as a vector are one-hot encoding and word embeddings. To feed text into a machine learning model, the loaded string data has to be quantified in some way. Bag of words and one-hot encoding of each word are well-known methods.
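To make the two well-known methods concrete, here is a small pure-Python sketch of one-hot encoding and bag of words. The helper names and the example sentences are made up for illustration.

```python
def build_vocab(sentences):
    """Assign each distinct word a position, in alphabetical order."""
    words = sorted({w for s in sentences for w in s.lower().split()})
    return {w: i for i, w in enumerate(words)}

def one_hot(word, vocab):
    """Vector of zeros with a single 1 at the word's position."""
    vec = [0] * len(vocab)
    vec[vocab[word]] = 1
    return vec

def bag_of_words(sentence, vocab):
    """Count how often each vocabulary word occurs in the sentence."""
    vec = [0] * len(vocab)
    for w in sentence.lower().split():
        if w in vocab:
            vec[vocab[w]] += 1
    return vec

sentences = ["the cat sat", "the dog sat down"]
vocab = build_vocab(sentences)
print(one_hot("dog", vocab))                    # [0, 1, 0, 0, 0]
print(bag_of_words("the dog sat down", vocab))  # [0, 1, 1, 1, 1]
```

Both vectors are as long as the vocabulary, which is why they become large and sparse on real text; word embeddings replace them with short dense vectors.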

This time I want to use the Keras embedding layer at the beginning of the network, so I assign a word ID to each word and convert the text to a sequence of word IDs. Another common use case for the Functional API is building models with multiple inputs or multiple outputs. Here, I'll show an example with one input and multiple outputs. Note that the example below is certainly fabricated; I just want to demonstrate how to build a simple and small model. Most real-world examples are much bigger in terms of the data and computing resources needed.

You can find some good examples here and here (albeit in Python, but you'll find that the Keras code and function names look almost the same as in R). This also cannot be handled with the Sequential API. In this article we saw how word embeddings can be implemented with the Keras deep learning library. We implemented custom word embeddings and also used pretrained word embeddings to solve a simple classification task.

Finally, we also saw how to implement word embeddings with the Keras Functional API. In Keras, we create neural networks using either the functional API or the sequential API. With both APIs, it can be very challenging to prepare data for the model's input layer, especially for RNN and LSTM models. This is because of the varying length of the input sequences. The pretrained embeddings come in several different vector sizes, including 50, 100, 200 and 300 dimensions. You can download this collection of embeddings and seed the Keras Embedding layer with weights from the pre-trained embedding for the words in your training dataset.
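Seeding an Embedding layer with pretrained vectors can be sketched as follows. The tiny `pretrained` dictionary is a hypothetical stand-in for vectors loaded from a downloaded embedding file, and it uses 3 dimensions instead of 50 to 300 only to keep the example short.

```python
import numpy as np
from tensorflow import keras
from tensorflow.keras import layers

# Hypothetical stand-in for vectors read from a pretrained embedding file.
pretrained = {
    "good": np.array([0.1, 0.2, 0.3], dtype="float32"),
    "bad": np.array([-0.1, -0.2, -0.3], dtype="float32"),
}
# Word IDs from the training data; "meh" has no pretrained vector.
word_index = {"good": 1, "bad": 2, "meh": 3}
embedding_dim = 3
vocab_length = len(word_index) + 1  # index 0 is reserved for padding

# Row i holds the vector for word ID i; unknown words stay all zeros.
embedding_matrix = np.zeros((vocab_length, embedding_dim), dtype="float32")
for word, idx in word_index.items():
    vector = pretrained.get(word)
    if vector is not None:
        embedding_matrix[idx] = vector

inputs = keras.Input(shape=(4,), dtype="int32")
# trainable=False keeps the pretrained vectors fixed during training.
embedding_layer = layers.Embedding(vocab_length, embedding_dim, trainable=False)
embedded = embedding_layer(inputs)
# The layer's weights exist once it has been called, so they can be replaced.
embedding_layer.set_weights([embedding_matrix])
model = keras.Model(inputs, embedded)
```

Setting `trainable=True` instead would let training update the pretrained vectors along with the rest of the network.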

Real-life problems are not sequential or homogeneous in form. In practice, you will most likely need to incorporate multiple inputs and outputs into your deep learning model, and the functional API is how you define such more complex models with shared layers and multiple inputs and outputs. As for word embeddings, there are two ways to obtain them. One way is to train your word embeddings during the training of your neural network. The other way is to use pretrained word embeddings, which you can use directly in your model.

With pretrained embeddings, you have the option to either leave the word embeddings unchanged during training or to train them as well. This method represents words as dense word vectors that are trained, unlike one-hot encodings, which are hardcoded. This means that word embeddings pack more information into fewer dimensions.

When you work with machine learning, one important step is to define a baseline model. This usually involves a simple model, which is then used as a comparison for the more advanced models that you actually want to test. In this case, you'll use the baseline model to compare it to the more advanced methods involving neural networks, the meat and potatoes of this tutorial. For many models, keras_model_sequential is sufficient! For example, it can be used to build basic MLPs, CNNs and LSTMs. Examples of more complex models include an Auxiliary Classifier Generative Adversarial Network and neural style transfer.

To get the number of unique words in the text, you can simply take the length of the word_index dictionary of the word_tokenizer object. One is added to this count to store the dimensions for the words for which no pretrained word embeddings exist. In the functional API, models are created by specifying their inputs and outputs in a graph of layers. That means that a single graph of layers can be used to generate multiple models.
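The point that one graph of layers can yield several models can be illustrated with a small sketch. The encoder and autoencoder setup, the layer sizes and the layer names are assumptions chosen for illustration.

```python
import numpy as np
from tensorflow import keras
from tensorflow.keras import layers

# One graph of layers...
encoder_input = keras.Input(shape=(32,), name="features")
x = layers.Dense(16, activation="relu", name="enc_hidden")(encoder_input)
encoder_output = layers.Dense(4, activation="relu", name="bottleneck")(x)
x = layers.Dense(16, activation="relu", name="dec_hidden")(encoder_output)
decoder_output = layers.Dense(32, name="reconstruction")(x)

# ...used to define two models that share the same layers and weights.
encoder = keras.Model(encoder_input, encoder_output, name="encoder")
autoencoder = keras.Model(encoder_input, decoder_output, name="autoencoder")

data = np.random.rand(3, 32).astype("float32")
codes = encoder.predict(data, verbose=0)
reconstructed = autoencoder.predict(data, verbose=0)
print(codes.shape, reconstructed.shape)
```

Because both models are built from the same layer objects, training the autoencoder also trains the encoder.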

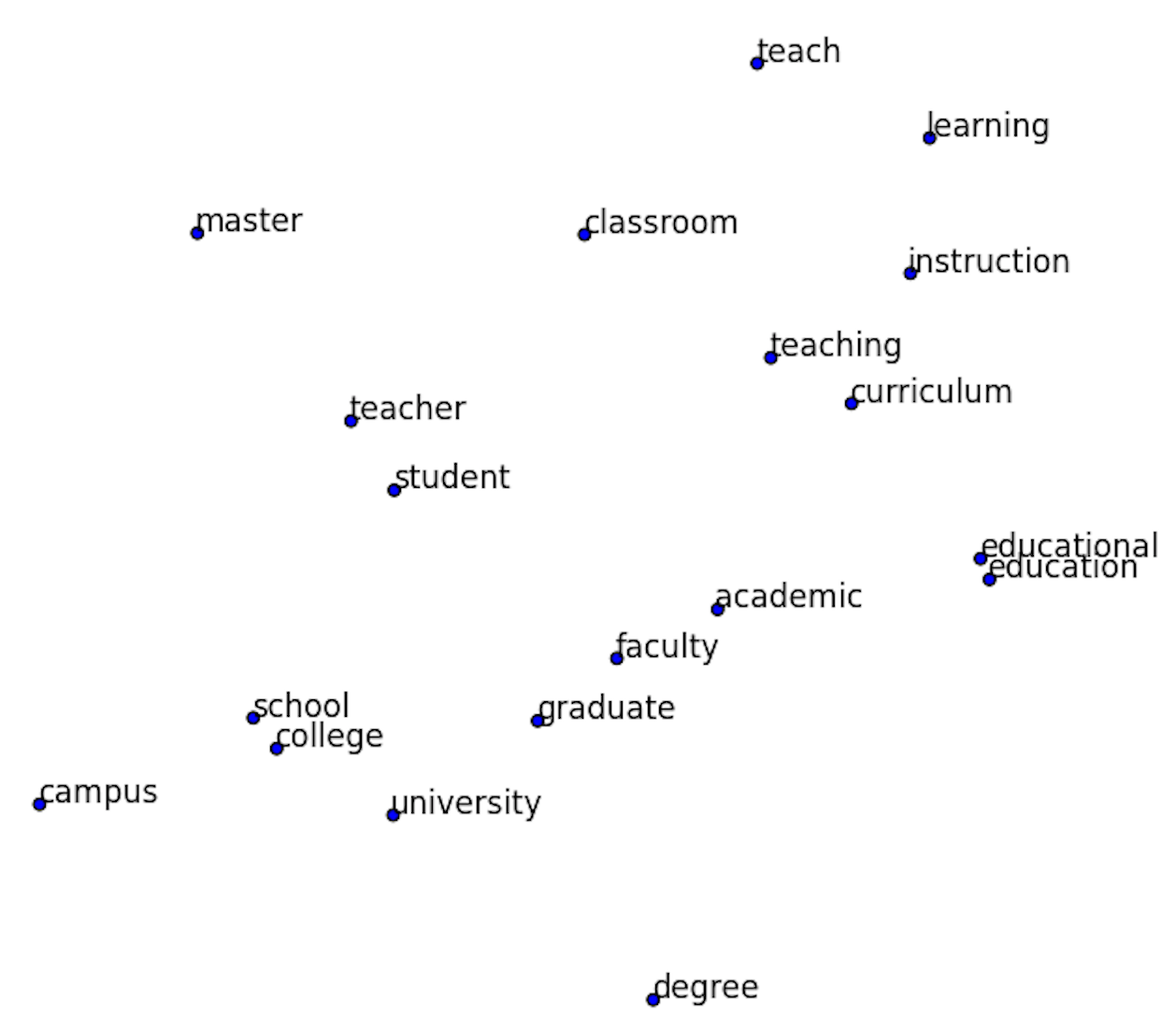

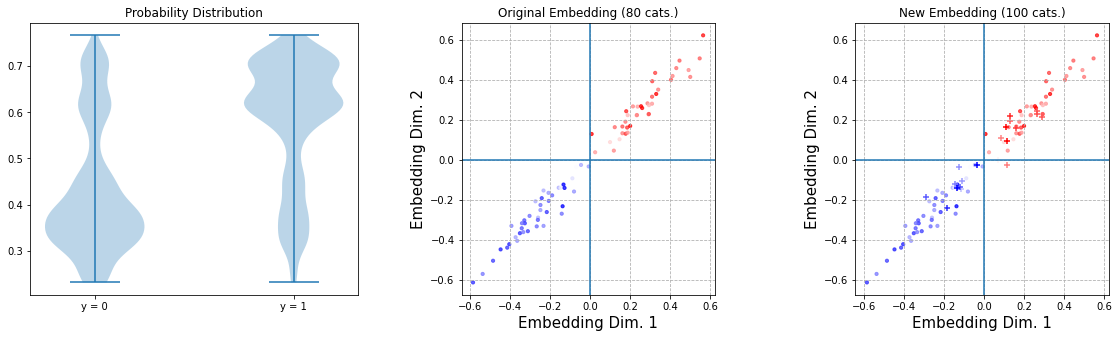

The Keras Functional API is used to define complex models, for example multi-output models, directed acyclic graphs, or models with shared layers. In other words, the functional API allows you to define inputs or outputs that share layers. As for embeddings: if your network is trained to do sentiment classification, it will probably just group or cluster words in the embedding according to their "emotional" load.
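Layer sharing can be sketched as below: one Embedding layer instance is applied to two different inputs, so both inputs are encoded with the same weights. The sizes and the pooling and dense layers are assumptions made for illustration.

```python
import numpy as np
from tensorflow import keras
from tensorflow.keras import layers

text_a = keras.Input(shape=(10,), dtype="int32", name="text_a")
text_b = keras.Input(shape=(10,), dtype="int32", name="text_b")

# A single layer instance, reused for both inputs: its weights are shared.
shared_embedding = layers.Embedding(1000, 128, name="shared_embedding")
encoded_a = layers.GlobalAveragePooling1D()(shared_embedding(text_a))
encoded_b = layers.GlobalAveragePooling1D()(shared_embedding(text_b))

merged = layers.concatenate([encoded_a, encoded_b])
score = layers.Dense(1, activation="sigmoid", name="score")(merged)
model = keras.Model(inputs=[text_a, text_b], outputs=score)

ids_a = np.random.randint(0, 1000, size=(2, 10))
ids_b = np.random.randint(0, 1000, size=(2, 10))
preds = model.predict([ids_a, ids_b], verbose=0)
print(preds.shape, model.count_params())
```

The embedding weights (1000 x 128) are counted only once in the model, even though the layer is used on two inputs.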

Nevertheless, based on my experience it is often helpful to initialize your embedding layer with weights learned by word2vec on a huge corpus. The first values represent the index in the vocabulary, as you have learned from the previous examples. You can also see that the resulting feature vector contains mostly zeros, since you have a rather short sentence. In the next part you will see how to work with word embeddings in Keras. With the functional API, you can build a model with multiple inputs. In multiple-input models, the different branches are usually combined at some point by a layer that can merge tensors.

To combine tensors, you can add them, concatenate them, and so on; Keras provides layers such as keras.layers.add and keras.layers.concatenate for this. This tutorial contains an introduction to word embeddings. You will train word embeddings, using from tensorflow.keras.layers.experimental.preprocessing import TextVectorization. In this article we cover the two word embeddings in NLP, Word2vec and GloVe. Most real-world problems involve a dataset that has a large number of rare words.
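The difference between the two merge operations can be seen in a short sketch. The input width of 8 and the constant test values are arbitrary choices for illustration.

```python
import numpy as np
from tensorflow import keras
from tensorflow.keras import layers

a = keras.Input(shape=(8,))
b = keras.Input(shape=(8,))

summed = layers.add([a, b])          # element-wise sum, shape stays (None, 8)
joined = layers.concatenate([a, b])  # joined side by side, shape (None, 16)

model = keras.Model(inputs=[a, b], outputs=[summed, joined])

x1 = np.ones((2, 8), dtype="float32")
x2 = np.full((2, 8), 2.0, dtype="float32")
s, j = model.predict([x1, x2], verbose=0)
print(s.shape, j.shape)
```

Adding requires both tensors to have the same shape, while concatenating only requires them to match on the axes that are not being joined.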
